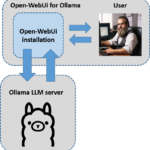

I would like to start this article by briefly describing what kind of project Alpaca-LLaMA is. It is an open-source project that aims to create a local chatbot with GPT-3-like capabilities. The model is based on Meta's LLaMA and was fine-tuned on instruction data generated with OpenAI's text-davinci-003; you can find the project page on the Stanford University website under Stanford Alpaca. Unlike ChatGPT and similar chatbots that are hosted on cloud platforms and require an internet connection, Alpaca-LLaMA runs locally on almost any computer without an internet connection. However, for the larger and more capable networks to answer quickly, I recommend a CPU with 32+ cores and at least 64GB of RAM. With that, the local chatbot can understand natural language and respond to the user's questions.

Alpaca-LLaMA must under no circumstances be used for commercial applications such as customer support, product descriptions or text creation. Legally, it builds on a copy of Meta's LLaMA neural network, whose weights ended up on the publicly accessible internet without Meta intending it and are thus out of Meta's control. The researchers address this legal situation on the project page as follows:

We emphasize that Alpaca is intended only for academic research and any commercial use is prohibited. There are three factors in this decision: First, Alpaca is based on LLaMA, which has a non-commercial license, so we necessarily inherit this decision. Second, the instruction data is based on OpenAI’s text-davinci-003, whose terms of use prohibit developing models that compete with OpenAI. Finally, we have not designed adequate safety measures, so Alpaca is not ready to be deployed for general use.

Nevertheless, this project is a promising approach to developing chatbots and language AI technologies that operate independently of cloud platforms, and it will hopefully help move global AI research forward faster.

Here is a summary of the key points about the Alpaca-LLaMA project:

- Alpaca-LLaMA is an open-source chatbot based on Meta's LLaMA model, fine-tuned to follow instructions in the style of GPT-3, that can run on almost any computer without an internet connection.

- Unlike ChatGPT and similar chatbots that require an internet connection and are hosted on a cloud platform, Alpaca-LLaMA runs locally on the computer.

- The chatbot is able to understand natural language and respond to questions or queries posed to it.

- Technically, Alpaca-LLaMA could be used for applications such as customer support, product descriptions or text creation, but the license currently prohibits commercial use, so the legal issues would have to be clarified first.

- The chatbot can also be used as a tool for creative writing projects or as a personalized writing assistant.

- The developers of Alpaca-LLaMA emphasize that their technology is not intended as a replacement for human authors or writers, but as a tool to facilitate and improve the writing process.

- Installing Alpaca-LLaMA requires some technical knowledge and can be somewhat complicated for inexperienced users. With some Linux experience and a fast internet connection, the installation takes about 30 minutes.

- Alpaca-LLaMA is a promising approach to developing chatbots and language AI technologies and will hopefully advance global research in this area.

Installation – Dalai on Ubuntu (Linux)

For the installation on Ubuntu / Linux, it is best to follow the installation instructions of the following project on GitHub:

Dalai GitHub project page: GitHub – cocktailpeanut

If the installation is started as described there, a folder named dalai is created in the user's HOME directory. Unfortunately, I did not find a parameter to change the installation path. I therefore recommend creating a symbolic link that points to a large and fast drive.
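The symbolic link recommended above could be set up roughly as follows. This is only a sketch: the helper name `link_dalai_dir` and the example paths are my own assumptions, and the link has to exist before the installer creates a real dalai folder. The demo at the bottom runs in a throwaway temporary directory, so it does not touch your actual HOME.

```python
import os
import tempfile
from pathlib import Path


def link_dalai_dir(target_dir: Path, home_dir: Path) -> Path:
    """Create <home_dir>/dalai as a symlink pointing to target_dir.

    Hypothetical helper, not part of Dalai itself.
    """
    target_dir.mkdir(parents=True, exist_ok=True)
    link_path = home_dir / "dalai"
    if link_path.is_symlink():
        # A link is already in place; leave it untouched.
        return link_path
    if link_path.exists():
        # A real folder exists; move it manually instead of overwriting it.
        raise FileExistsError(f"{link_path} already exists and is not a symlink")
    link_path.symlink_to(target_dir, target_is_directory=True)
    return link_path


if __name__ == "__main__":
    # Demo with stand-in directories instead of the real HOME and drive.
    with tempfile.TemporaryDirectory() as tmp:
        fake_home = Path(tmp) / "home"
        fake_home.mkdir()
        big_drive = Path(tmp) / "big_drive" / "dalai"
        link = link_dalai_dir(big_drive, fake_home)
        print(link, "->", os.readlink(link))
```

For real use you would call it with `Path.home()` and a folder on the large drive of your choice.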

Hint: hardware

It is important that the CPU is powerful and that enough RAM (64GB+) is available. I do not dispute that, technically, the whole thing would also run on a Raspberry Pi, but whether that is a pleasure for the user is another matter.

Hint: software dependencies

It is important that the versions of Python and Node.js match those specified on the project page. Otherwise there will be problems and the project will not work.
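A quick way to check the installed versions before starting could look like the sketch below. The limits `MAX_PYTHON` and `MIN_NODE` are assumptions on my part (Python 3.10 or lower, Node.js 18 or higher); please compare them with what the project page currently states.

```python
import re
import shutil
import subprocess
import sys

# Assumed limits; verify them against the Dalai project page.
MAX_PYTHON = (3, 10)  # assumption: Python 3.10 or lower
MIN_NODE = (18,)      # assumption: Node.js 18 or higher


def parse_version(text: str) -> tuple[int, ...]:
    """Turn 'v18.15.0' or 'Python 3.10.6' into a tuple such as (18, 15, 0)."""
    match = re.search(r"(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def python_ok(version: tuple[int, ...]) -> bool:
    """Compare major.minor against the assumed upper limit."""
    return version[:2] <= MAX_PYTHON


def node_ok(version: tuple[int, ...]) -> bool:
    """Compare the major version against the assumed lower limit."""
    return version[:1] >= MIN_NODE


if __name__ == "__main__":
    py = tuple(sys.version_info[:3])
    print("Python", py, "ok:", python_ok(py))
    node = shutil.which("node")
    if node is None:
        print("Node.js not found on PATH")
    else:
        out = subprocess.run([node, "--version"], capture_output=True, text=True).stdout
        print("Node.js", out.strip(), "ok:", node_ok(parse_version(out)))
```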

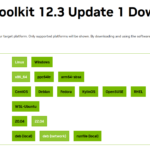

The project page also describes clearly how the neural networks of different sizes can be downloaded. The install command is followed by the names of the networks to download. Here is an example that sets up all Alpaca nets, which will occupy about 74 GB on the target drive.

Command: npx dalai alpaca install 7B 13B 30B 65B

Downloading the nets takes some time. If you do not want to wait that long, or if you start with the 7B and 13B nets and download the 30B and 65B nets a few days later, running the command a second time can delete the already downloaded 7B and 13B nets. Therefore, whenever a net has been downloaded successfully, save a copy in a separate folder. I consider this a small bug in the downloader, and I expect the llama command to behave similarly.

Command: npx dalai llama install 7B 13B 30B 65B

Of course, sufficient space is required on the target drive as well as on the backup drive. This quickly adds up to a total of 400GB+.
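Before starting a download, the free space on the target and backup drives can be checked with a few lines like these. This is a minimal sketch: the function names are my own, and the 400 GB figure is simply the rough total mentioned above, used here for both drives for illustration.

```python
import shutil

GB = 1000 ** 3  # decimal gigabytes, as used for the sizes in this article


def free_gb(path: str) -> float:
    """Free space in GB on the drive that contains path."""
    return shutil.disk_usage(path).free / GB


def has_room(path: str, required_gb: float) -> bool:
    """True if the drive containing path has at least required_gb free."""
    return free_gb(path) >= required_gb


if __name__ == "__main__":
    # "." stands in for the target and backup folders; adjust to your setup.
    for label, path in (("target drive", "."), ("backup drive", ".")):
        status = "ok" if has_room(path, 400) else "too small"
        print(f"{label}: {free_gb(path):.0f} GB free ({status})")
```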

Hint: back up your nets

As described above, always save all successfully downloaded nets, whether alpaca or llama, in a separate backup folder so that they are not accidentally lost.

Once everything is set up and installed, Dalai can be started as a framework. The command for this is also listed in the installation instructions linked above.
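The backup step can be automated with a small script like the following sketch. It copies every file that is not yet in the backup folder and skips files that are already there with the same size. The function name `backup_models` and the folder layout in the demo are assumptions for illustration; point it at wherever your installation actually stores the nets.

```python
import shutil
import tempfile
from pathlib import Path


def backup_models(models_dir: Path, backup_dir: Path) -> list[str]:
    """Copy files missing from backup_dir; return their relative paths."""
    copied = []
    for src in sorted(models_dir.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(models_dir)
        dst = backup_dir / rel
        if dst.exists() and dst.stat().st_size == src.stat().st_size:
            continue  # already backed up
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)
        copied.append(rel.as_posix())
    return copied


if __name__ == "__main__":
    # Demo with stand-in folders; the real model folder layout is assumed.
    with tempfile.TemporaryDirectory() as tmp:
        models = Path(tmp) / "dalai" / "alpaca" / "models"
        (models / "7B").mkdir(parents=True)
        (models / "7B" / "weights.bin").write_bytes(b"demo")
        backup = Path(tmp) / "BackUp"
        print(backup_models(models, backup))  # first run copies the file
        print(backup_models(models, backup))  # second run finds nothing new
```

Running it after each successful download keeps the backup current without copying the large files again.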

Dalai test

The Stanford University project page offers a live demo so that everyone can try out the neural networks. The demo page can be reached via the following URL.

Demo Dalai page: https://crfm.stanford.edu/alpaca/

In the menu of the web interface, you can then directly select the neural network you want to use. For example, alpaca7B or alpaca30B.

If the query window is displayed as shown in the picture above, you can enter your question in the prompt field. I recommend asking in English: according to the Meta paper, other languages such as German also work, but the best results are achieved in English. As in ChatGPT, you can ask questions in the following form: “List 10 points that led to the development of the internal combustion engine.” In this case, the alpaca 30B network returns an answer that is comparable to the one from ChatGPT.

Here are the answers of the two nets for comparison.

Dalai project – Alpaca 30B – answers:

- The 10 key events leading up to the invention and successful deployment of an internal-combustion engine are as follows:

- The discovery by John Cugnot, a French inventor who built what is believed to be one of the first self propelled vehicles using steam power.

- The development of gasoline engines by Samuel Morey (US), Etienne Lenoir(France), and Gottleib Daimler (Germany).

- Otto’s four-stroke engine, which was an improvement on previous designs that could only run in two cycles.

- Rudolf Diesel’s development of a compression ignition engine to be used for power generation applications using coal dust as fuel.

- Karl Benz and Gottleib Daimler’s work with the automobile industry, which led to the commercialization of internal combustion engines in cars

- The introduction by Henry Ford of mass production techniques that enabled a huge increase in the number of vehicles produced using ICE technology.

- Robert Goddard’s development and demonstration of liquid-fueled rocketry, which used an engine similar to those found inside internal combustion engines

- The advent of hybrid electric cars, such as Toyota Prius in the late nineties that were able to utilize both petrol or diesel powered ICEs and battery storage for energy.

- Recent improvements by car manufacturers like Volvo who have committed themselves to switching from traditional internal combustion engines (ICE) towards electric vehicles over time

- The development of hydrogen fuel cells, which are beginning to be used in place of ICEs due their greater efficiency and cleaner emissions.

ChatGPT – answers:

- Discovery of the principle of combustion: The concept of combustion dates back to ancient times, but it was not until the 17th century that the principle of combustion was fully understood.

- Development of the steam engine: The steam engine was the first significant step towards the development of the internal combustion engine. It showed that energy could be harnessed and converted into motion.

- Early experiments with gas engines: In the early 19th century, inventors began to experiment with gas engines that used coal gas or other gaseous fuels to power internal combustion engines.

- Invention of the spark plug: The spark plug was invented in the 1860s, and it allowed for more efficient combustion in internal combustion engines.

- Development of the four-stroke cycle: The four-stroke cycle was developed in the 1870s, and it became the basis for most modern internal combustion engines.

- Improvements in fuel injection: The development of fuel injection systems in the early 20th century allowed for more precise control of fuel delivery, resulting in more efficient combustion.

- Use of gasoline as a fuel: Gasoline became the preferred fuel for internal combustion engines in the early 20th century due to its high energy density and availability.

- Advancements in metallurgy: Improvements in metallurgy in the 20th century allowed for the development of stronger, more durable engine components.

- Use of turbochargers and superchargers: Turbochargers and superchargers were developed in the early 20th century, and they allowed for more power to be extracted from internal combustion engines.

- Advancements in electronics and computer technology: In recent years, the use of electronic controls and computer technology has allowed for even more precise control of internal combustion engines, resulting in increased efficiency and performance.

Summary

Speaking as a user of the Dalai project, I cannot say that I am wildly enthusiastic. There are two points of view to consider here. First, there is the scientific view of the project and the possibilities associated with it; it is great and very exciting to see how the project will advance research on AI chatbots. Second, as a user who needs such a tool professionally for work, I would always choose an OpenAI ChatGPT Plus account. But even then, privacy and the question of what information I share with OpenAI are always worth considering, because privacy challenges can arise very quickly here too. The Dalai project is therefore certainly great for trying things out yourself, working locally with this incredibly exciting technology and retaining data sovereignty.