After I had installed Ollama as a server and written one or two Python programs that use its API and the underlying language models, Open-WebUi for Ollama caught my interest. This article is the result: it describes how to install and use Open-WebUi. Further down, after the setup, there is a short report on my first experiences. But first, let's install Open-WebUi on an Ubuntu computer.

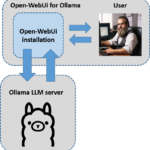

Here is a brief overview of what the architecture currently looks like on my computer.

You can read more about Open-WebUi here on GitHub: GitHub Open-WebUi

Installation of Open-WebUi

First, update the package index.

Command: sudo apt-get update

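Install Docker and containerd.io. The docker-ce packages come from Docker's own apt repository, so that repository must already be set up on the system.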

Command: sudo apt-get install docker-ce docker-ce-cli containerd.io

Use the following command to check that Docker has been installed and runs without errors.

Command: sudo docker run hello-world

Next, check that Ollama is actually running on your computer. To do this, open http://127.0.0.1:11434 in the browser. A page with the message "Ollama is running" should appear.

URL: 127.0.0.1:11434
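The same check can also be scripted instead of opening the browser. The following is only a minimal sketch, assuming Ollama listens on its default address as described above; the function name `ollama_is_running` is my own and not part of Ollama.

```python
import urllib.error
import urllib.request


def ollama_is_running(base_url: str = "http://127.0.0.1:11434", timeout: float = 3.0) -> bool:
    """Return True if an HTTP GET on base_url is answered with status 200."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, HTTP error status and similar failures.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_running())
```

If this prints `False`, the Open-WebUi container will not be able to connect either, so it is worth fixing Ollama first.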

If Ollama is not running on your computer or you need to install it first, you can find the installation instructions here.

URL: https://ai-box.eu/top-story/ollama-ubuntu-installation-und-konfiguration/1191/

Now create and start the Open-WebUi container with the following command. I took it from the Medium post "Ollama with Ollama-webui with a fix", because the command from the Open-WebUi project did not work for me.

Command: docker run -d --network="host" -v open-webui:/app/backend/data -e OLLAMA_API_BASE_URL=http://localhost:11434/api --name open-webui ghcr.io/ollama-webui/ollama-webui:main

If the open-webui Docker container should also start again automatically after every system reboot, use the following variant with --restart always instead of the command above.

Command: docker run -d --network="host" -v open-webui:/app/backend/data -e OLLAMA_API_BASE_URL=http://localhost:11434/api --restart always --name open-webui ghcr.io/ollama-webui/ollama-webui:main
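To make the individual options of this command easier to read, here is a small sketch that assembles the same argument list in Python. The helper `build_run_command` is a hypothetical name of my own; the flags and values are exactly those from the commands above.

```python
import shlex


def build_run_command(restart_always: bool = False) -> list:
    """Assemble the docker run arguments used in this article."""
    command = [
        "docker", "run",
        "-d",                                  # run detached, in the background
        "--network=host",                      # share the host network, so localhost reaches Ollama
        "-v", "open-webui:/app/backend/data",  # named volume that keeps the WebUi data
        "-e", "OLLAMA_API_BASE_URL=http://localhost:11434/api",  # where the WebUi finds Ollama
    ]
    if restart_always:
        # Let Docker start the container again after a reboot.
        command += ["--restart", "always"]
    command += ["--name", "open-webui", "ghcr.io/ollama-webui/ollama-webui:main"]
    return command


if __name__ == "__main__":
    # Print the command as one shell line instead of executing it.
    print(shlex.join(build_run_command(restart_always=True)))
```

The sketch only prints the command. Because both variants use the same container name, only one of them can be created at a time.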

Now the WebUi container should be running and you should be able to open Open-WebUi via the following URL.

URL: 127.0.0.1:8080

To reach the Open-WebUi interface from other computers on the intranet, replace 127.0.0.1 with the IP address of the computer on which the container is running.

URL: <IP address>:8080
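If you are not sure which IP address to use, the following sketch shows one common way to find it from Python. It is only an illustration under assumptions: the helper names `local_ip` and `webui_url` are my own, and the UDP socket trick merely asks the operating system which local address it would use for outgoing traffic, without sending any data.

```python
import socket


def local_ip() -> str:
    """Best-effort guess of this computer's LAN address."""
    probe = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Connecting a UDP socket sends no packet; it only selects a route.
        probe.connect(("10.255.255.255", 1))
        return probe.getsockname()[0]
    except OSError:
        # No usable network route, fall back to the loopback address.
        return "127.0.0.1"
    finally:
        probe.close()


def webui_url(ip_address: str, port: int = 8080) -> str:
    """Build the URL under which Open-WebUi is reachable."""
    return f"http://{ip_address}:{port}"


if __name__ == "__main__":
    print(webui_url(local_ip()))
```

On a computer with several network interfaces the result may not be the address you want, so compare it with the output of your usual network tools.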

Docker Commands

It took me several attempts until the Docker container ran without problems and Open-WebUi connected to the Ollama server. Here are the commands you need to delete a Docker container that you no longer need or that you simply want to create again.

The following command lists all Docker containers, including stopped ones, together with their IDs.

Command: docker ps -a

First stop the affected Docker container. You can do this with the following command and the ID that belongs to the container.

Command: docker stop <displayed Docker ID>

Use this command to remove the container.

Command: docker rm <displayed Docker ID>
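Because I repeated these two steps several times, they can also be wrapped in a small script. This is a sketch under assumptions: the helper names are my own, and it uses the container name open-webui from the run command above, which docker stop and docker rm accept in place of the ID. By default it only prints the commands.

```python
import subprocess


def removal_commands(container: str) -> list:
    """Return the two commands that stop and then remove a container."""
    return [
        ["docker", "stop", container],
        ["docker", "rm", container],
    ]


def remove_container(container: str, dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for command in removal_commands(container):
        if dry_run:
            print(" ".join(command))
        else:
            # check=True aborts with an exception if a command fails.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    remove_container("open-webui")
```

Calling `remove_container("open-webui", dry_run=False)` would actually stop and remove the container. The data in the open-webui volume is not touched by either command.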

You can now create the container again, for example with a slightly modified command.

Open-WebUi Ollama interface

When the web interface of Open-WebUi Ollama is loaded for the first time, a user must be created.

Important: this first user is given admin rights, so make sure you remember the login details.

Here is the view after the first registration. Now we need to download one or more language models (LLMs) so that we can chat with our data or with the model.

Download language model

Models can be downloaded very conveniently with the admin user via the web interface. This is really great and works without any problems. Once downloaded, the models are immediately available for use.
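If you want to check outside the web interface which models the Ollama server currently holds, its API can be queried directly. The following is a minimal sketch, assuming the /api/tags endpoint of Ollama, which to my understanding returns a JSON object with a "models" list whose entries carry a "name" field; the function names are my own.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list:
    """Extract the model names from a parsed /api/tags response."""
    # "models" may be missing or null when nothing has been downloaded yet.
    return [entry["name"] for entry in tags_response.get("models") or []]


def fetch_model_names(base_url: str = "http://127.0.0.1:11434", timeout: float = 5.0) -> list:
    """Ask the local Ollama server which models it has."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return model_names(json.load(response))


if __name__ == "__main__":
    try:
        print(fetch_model_names())
    except OSError as error:
        print("Could not reach Ollama:", error)
```

A model downloaded through Open-WebUi should show up in this list, since the web interface stores it on the same Ollama server.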

Select language model

Each user can now select the downloaded language models and work with them. This works really well.

Upload data such as PDF files

It is also possible to upload data, for example in the form of PDF files, and then interact with it in the chat window. This works really well and is a lot of fun. It worked best with catalogs. When I uploaded several files, among them the Mercedes-Benz annual report, either the language model I use or the Open-WebUi solution itself seemed to reach its limits when interacting with the PDF files.

Here is a picture of the menu before I uploaded the PDF files.

After uploading approx. 30 PDF files, the window will look as shown below.

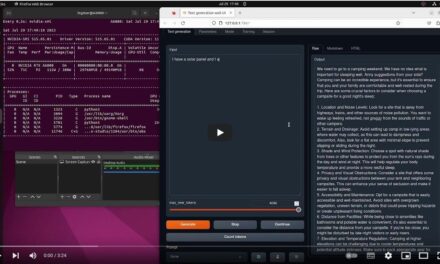

I then switched to chat mode and used “#” to add all the PDF files I wanted to chat with to the chat. You can see the result in the picture.

Open-WebUi Prompt Community

I also find it very exciting that you can create a prompt template that tells the LLM how to behave, for example in a certain style or role. It is worth taking a look at the following URL and creating such a template for recurring tasks such as generating marketing texts. You could, for instance, create a prompt template for a marketing expert and work with it.

URL: https://openwebui.com/?type=prompts

I have built a prompt that acts as a kind of assistant for a call center and requests personal information from the customer that is needed to conclude a loan agreement. The prompt template looks like this.

Summary

I had a lot of fun installing Open-WebUi for Ollama and trying out a few things with it. Chatting with PDF files also works well as long as the requests are not too complex, although I still need to put a lot more work into this part. I have also created a prompt template that works quite well and that I enjoyed building. It still needs some improvement for professional use, but for generating a few nice texts for Twitter & Co. I found it sufficient. A lot depends on the underlying language model and what it can do, and I expect this to get a lot better soon.