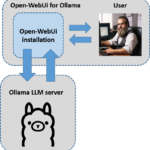

I had always wanted to try one of Meta's Llama models, and with the release of Llama 2 the time had finally come, so I went looking for a way to set it up locally on my computer. There are several guides, but the best framework I could find was oobabooga, which is also open source. After settling on the framework, I had to decide which Llama 2 model to test. I chose Llama-2-13B-Chat because it fits into the memory of my NVIDIA A6000 graphics card. I signed up with Meta to get access to the current Llama 2 models so that I could download them from Hugging Face. In the end I chose the model TheBloke_Llama-2-13B-Chat-fp16, which I could easily download via the web interface of the oobabooga framework.

But enough introduction. Let’s start with the installation of the necessary components.

Installing oobabooga

I installed the oobabooga framework on an Ubuntu system and, as mentioned at the beginning, use an NVIDIA A6000 graphics card into which I can load the Llama 2 model.

Here is the GitHub page that leads you to the framework.

GitHub: https://github.com/oobabooga/text-generation-webui

Create Anaconda environment

The oobabooga framework is installed in a separate Anaconda environment, which keeps this installation and its packages isolated from other installations. Please execute the two following commands.

Command: conda create -n Llama_2_textgen python=3.10.9

This creates the Anaconda environment named Llama_2_textgen, which can be activated as shown below.

Command: conda activate Llama_2_textgen

Hint: If Anaconda is not yet installed on your system you can read here how to install Anaconda under Ubuntu.

Install PyTorch

For everything to run smoothly you need to install torch and a few related packages. You do this with the following command.

Command: pip3 install torch torchvision torchaudio
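Before going further, it can help to confirm that the freshly installed torch actually sees the graphics card. The following is only a minimal sketch: the function name describe_cuda is made up for this post, and it assumes the GPU of interest is device 0.

```python
def describe_cuda() -> str:
    """Return a one-line summary of what PyTorch can see on this machine."""
    try:
        import torch  # installed by the pip3 command above
    except ImportError:
        return "PyTorch is not installed in this environment"

    if not torch.cuda.is_available():
        return f"PyTorch {torch.__version__} is installed, but no CUDA GPU is visible"

    # Assumption: the card we care about is device 0.
    props = torch.cuda.get_device_properties(0)
    total_gb = props.total_memory / 1e9
    return f"PyTorch {torch.__version__} sees {props.name} with {total_gb:.1f} GB"


print(describe_cuda())
```

Run it inside the activated Llama_2_textgen environment. If it reports that no CUDA GPU is visible, the model would have to run on the CPU, which is hardly practical for a 13B model.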

Download oobabooga framework

Command: git clone https://github.com/oobabooga/text-generation-webui

Command: cd text-generation-webui

Command: pip install -r requirements.txt

bitsandbytes installation

Command: pip install bitsandbytes==0.38.1

I also had to install the following additional modules in my Conda environment.

Command: pip install idna

Command: pip install certifi

Command: pip install pytz

Command: pip install six

Command: pip install pyyaml

Command: pip install pyparsing

Command

After that, I was finally able to launch the application, that is, the web UI.

Starting the oobabooga web interface

Command: conda activate Llama_2_textgen

Command: cd text-generation-webui

Command: python server.py

Download LLM Models

In the oobabooga web interface, open the Model tab, enter the model name in the Download custom model or LoRA field on the right, and click Download. The corresponding model is then downloaded from Hugging Face and set up. I could load the following model, TheBloke/Llama-2-13B-Chat-fp16, locally on my NVIDIA A6000 without any problems.

Model Name: TheBloke/Llama-2-13B-Chat-fp16
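Note that the model is called TheBloke/Llama-2-13B-Chat-fp16 on Hugging Face, while the name passed to the web UI in this post is TheBloke_Llama-2-13B-Chat-fp16. The sketch below shows that mapping and a quick check for whether the download has arrived. Both helper names are hypothetical, and the assumption that models land in a models folder inside text-generation-webui with the slash replaced by an underscore is inferred from the names used here, so please verify it on your own installation.

```python
from pathlib import Path


def model_folder_name(repo_id: str) -> str:
    """Map a Hugging Face repo id to the local name used in this post."""
    # Assumption: the slash between owner and model becomes an underscore.
    return repo_id.replace("/", "_")


def is_downloaded(repo_id: str, webui_dir: str = "text-generation-webui") -> bool:
    """Check whether a folder for the model exists below <webui_dir>/models."""
    # Assumption: downloads are stored in the "models" subfolder.
    return (Path(webui_dir) / "models" / model_folder_name(repo_id)).is_dir()


print(model_folder_name("TheBloke/Llama-2-13B-Chat-fp16"))
```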

The model occupies about 29 GB of memory on the graphics card. That probably also means that the 30B model, once it is available, cannot be loaded just like that.

If you want to work out the largest model that fits into the memory of your graphics card, the calculation works as follows, using a 70B model as an example.

Number of parameters = 70,000,000,000

Bits per parameter = 32 bit

1 byte = 8 bit

Calculation: (70,000,000,000 * 32) / 8 = 280,000,000,000 byte = 280 GB

So we would need 280 GB of GPU RAM to load the 70B model at 32 bits per parameter. An fp16 model stores each parameter in 16 bits, which halves the figure to 140 GB.
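The same arithmetic can be written as a few lines of Python. This is only a rough sketch: the function name weights_size_gb is made up for this post, 1 GB is taken as 10^9 bytes, and only the weights are counted. Actual usage is higher, as the roughly 29 GB measured for the 13B fp16 model shows.

```python
def weights_size_gb(num_parameters: int, bits_per_parameter: int) -> float:
    """Estimate the memory needed for the model weights alone, in GB."""
    total_bytes = num_parameters * bits_per_parameter / 8  # 8 bit = 1 byte
    return total_bytes / 1e9  # 1 GB = 10^9 bytes


A6000_VRAM_GB = 48  # memory of the card used in this post

for name, params in [("13B", 13_000_000_000), ("70B", 70_000_000_000)]:
    for bits in (32, 16):
        size = weights_size_gb(params, bits)
        verdict = "fits" if size <= A6000_VRAM_GB else "does not fit"
        print(f"{name} at {bits} bit: {size:.0f} GB, {verdict} in {A6000_VRAM_GB} GB")
```

Treat the result as a lower bound and leave some headroom on the card.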

If you don’t want to do the calculation yourself, you can use this parameter calculator to do it.

URL: https://kdcreer.com/parameter_calculator

I tried to load the 70B model partly into the video RAM of my graphics card and partly onto a fast M.2 SSD, but the response time of the network was unbearably slow for a human.

The significantly larger model TheBloke/Llama-2-70B-Chat-fp16 did not fit into the memory of my NVIDIA A6000, whose 48 GB of RAM is simply too small.

Model name: TheBloke/Llama-2-70B-Chat-fp16

Starting the web interface – extended call

If you want oobabooga to start automatically with some preset parameters, such as the model to load, the command looks like this. I have explained on my blog a few times how to start something like this as a cron job, and here you can find an explanation whose principle you can reuse.

Command: python server.py --chat --multi-user --share --model TheBloke_Llama-2-13B-Chat-fp16 --gradio-auth <User-Name>:<Password>
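If you prefer not to type the password into the command line or a crontab entry, a small Python wrapper can assemble the same call. This is a sketch under a few assumptions: the function names and the environment variables WEBUI_USER and WEBUI_PASSWORD are invented for this example, and it only uses the flags from the command above.

```python
import os
import subprocess
import sys


def build_command(model: str, user: str, password: str, share: bool = True) -> list[str]:
    """Assemble the server.py call with the flags used in this post."""
    cmd = [sys.executable, "server.py", "--chat", "--multi-user"]
    if share:
        cmd.append("--share")
    cmd += ["--model", model, "--gradio-auth", f"{user}:{password}"]
    return cmd


def launch(webui_dir: str = "text-generation-webui") -> None:
    """Start the web UI; credentials come from (hypothetical) environment variables."""
    user = os.environ["WEBUI_USER"]
    password = os.environ["WEBUI_PASSWORD"]
    cmd = build_command("TheBloke_Llama-2-13B-Chat-fp16", user, password)
    # Blocks until the server exits; raises if it ends with an error code.
    subprocess.run(cmd, cwd=webui_dir, check=True)
```

Calling launch() from a script started with the Python interpreter of the Llama_2_textgen environment runs the server with that same interpreter, because sys.executable points to it.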

Video

I also recorded a short video that shows how fast the model responds on my computer. I found it very impressive and was thrilled.

Update the installation

If you want to update your installation of oobabooga you have to execute the following commands.

Command: conda activate Llama_2_textgen

Command: cd text-generation-webui

Command: git pull

Command: pip install -r requirements.txt --upgrade

Summary

I had expected it to be more difficult to get Llama 2 running locally on my computer, but the oobabooga framework does a lot of the work for me. I had some difficulties getting the framework installed, but in the end it all worked out. Now it runs just great, and I can test the model a bit and think about what I could use it for. On the whole I am very satisfied and will go deeper into the topic of large language models.