In terms of technical requirements and architecture, this solution is not very complex and is therefore ideal for getting started with large language models. It is also guaranteed to give you an initial sense of achievement very quickly. All the components used can be easily installed and combined with each other. I have followed the description below and have added a few more pieces of information that were important to me.

Source: https://blog.duy-huynh.com/build-your-own-rag-and-run-them-locally/

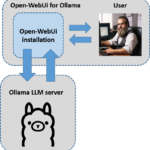

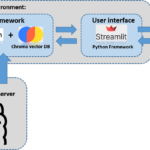

In the article “Ollama Ubuntu installation and configuration” we have already installed Ollama. Now I would like to briefly explain the architecture of the application that we are now going to build. The following image briefly shows the structure. We have the LLM server Ollama and the virtual environment in which all the components are installed that our RAG application needs so that we can chat with a PDF file. Streamlit provides the user interface. We also have the user who has to upload a PDF file in order to be able to interact with it.

Video Introductory course

To familiarize yourself with the basic principles of a retrieval-augmented generation (RAG) application, I recommend watching the following videos at your leisure.

- RAG From Scratch: Part 1 (Overview)

- RAG From Scratch: Part 2 (Indexing)

- RAG From Scratch: Part 3 (Retrieval)

- RAG From Scratch: Part 4 (Geneartion)

My article here goes straight into the development of the application and does not go into why something is done and how. I would like to mention once again that in this RAG application the data is not transferred to the Internet but everything runs locally on your computer.

Software installation

I always set up a virtual Anaconda environment for my projects. That’s what I’m doing here and if you haven’t installed Anaconda on your Ubuntu yet you can read here how to set up Anaconda.

URL: https://ai-box.eu/software/installation-von-anaconda-auf-ubuntu-lts-version/1170/

The following command creates an Anaconda environment with the name ollama_rag.

Befehl: conda create --name ollama_rag

You still need to activate the newly created environment. To do this, please execute the following command.

Befehl: conda activate ollama_rag

Now please install the extensions with the following command in the virtual environment ollama_rag.

Befehl: pip install langchain langchain-community chromadb fastembed streamlit streamlit_chat

Error messages:

After installing the packages as described above, I received the following error messages. I will now continue to see how relevant these are. Just as an example, the fact that openai>=0.26.4 is not installed should not be a problem for the further progress of the project.

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

llama-index 0.6.12 requires openai>=0.26.4, which is not installed.

auto-gptq 0.3.0+cu117 requires datasets, which is not installed.

transformers 4.26.1 requires tokenizers!=0.11.3,<0.14,>=0.11.1, but you have tokenizers 0.15.1 which is incompatible.

llama-index 0.6.12 requires typing-extensions==4.5.0, but you have typing-extensions 4.9.0 which is incompatible.

clip-interrogator 0.6.0 requires transformers>=4.27.1, but you have transformers 4.26.1 which is incompatible.

auto-gptq 0.3.0+cu117 requires transformers>=4.29.0, but you have transformers 4.26.1 which is incompatible.

The program code

This small application consists of two Python files. One represents the logic for the interaction with the large language model mistral via the Ollama server. The other Python file represents the user interface and is based on the Python program with the logic. I have created a folder with the name rag and placed the following two Python programs in it.

You can find the original program here on GitHub.

URL: https://gist.github.com/vndee/7776debe50b5e6c2b174add8646a4625

Copy the following source code into a Python file with the name rag.py.

from langchain.vectorstores import Chromafrom langchain.chat_models import ChatOllamafrom langchain.embeddings import FastEmbedEmbeddingsfrom langchain.schema.output_parser import StrOutputParserfrom langchain.document_loaders import PyPDFLoaderfrom langchain.text_splitter import RecursiveCharacterTextSplitterfrom langchain.schema.runnable import RunnablePassthroughfrom langchain.prompts import PromptTemplatefrom langchain.vectorstores.utils import filter_complex_metadataclass ChatPDF: vector_store = None retriever = None chain = None def __init__(self): self.model = ChatOllama(model="mistral") self.text_splitter = RecursiveCharacterTextSplitter(chunk_size=1024, chunk_overlap=100) self.prompt = PromptTemplate.from_template( """ <s> [INST] You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise. [/INST] </s> [INST] Question: {question} Context: {context} Answer: [/INST] """ ) def ingest(self, pdf_file_path: str): docs = PyPDFLoader(file_path=pdf_file_path).load() chunks = self.text_splitter.split_documents(docs) chunks = filter_complex_metadata(chunks) vector_store = Chroma.from_documents(documents=chunks, embedding=FastEmbedEmbeddings()) self.retriever = vector_store.as_retriever( search_type="similarity_score_threshold", search_kwargs={ "k": 3, "score_threshold": 0.5, }, ) self.chain = ({"context": self.retriever, "question": RunnablePassthrough()} | self.prompt | self.model | StrOutputParser()) def ask(self, query: str): if not self.chain: return "Please, add a PDF document first." return self.chain.invoke(query) def clear(self): self.vector_store = None self.retriever = None self.chain = Nonerag.py file. I also copied this from the following project on GitHub.rag-app.py.import osimport tempfileimport streamlit as stfrom streamlit_chat import messagefrom rag import ChatPDFst.set_page_config(page_title="ChatPDF")def display_messages(): st.subheader("Chat") for i, (msg, is_user) in enumerate(st.session_state["messages"]): message(msg, is_user=is_user, key=str(i)) st.session_state["thinking_spinner"] = st.empty()def process_input(): if st.session_state["user_input"] and len(st.session_state["user_input"].strip()) > 0: user_text = st.session_state["user_input"].strip() with st.session_state["thinking_spinner"], st.spinner(f"Thinking"): agent_text = st.session_state["assistant"].ask(user_text) st.session_state["messages"].append((user_text, True)) st.session_state["messages"].append((agent_text, False))def read_and_save_file(): st.session_state["assistant"].clear() st.session_state["messages"] = [] st.session_state["user_input"] = "" for file in st.session_state["file_uploader"]: with tempfile.NamedTemporaryFile(delete=False) as tf: tf.write(file.getbuffer()) file_path = tf.name with st.session_state["ingestion_spinner"], st.spinner(f"Ingesting {file.name}"): st.session_state["assistant"].ingest(file_path) os.remove(file_path)def page(): if len(st.session_state) == 0: st.session_state["messages"] = [] st.session_state["assistant"] = ChatPDF() st.header("ChatPDF") st.subheader("Upload a document") st.file_uploader( "Upload document", type=["pdf"], key="file_uploader", on_change=read_and_save_file, label_visibility="collapsed", accept_multiple_files=True, ) st.session_state["ingestion_spinner"] = st.empty() display_messages() st.text_input("Message", key="user_input", on_change=process_input)if __name__ == "__main__": page()Run the RAG Chat application program

Now change to the rag folder on your computer in the console and execute the Python file rag-app.py with the following command.

Befehl: streamlit run rag-app.py

If you now call up the IP address with port 8501 in the browser, the web interface of the small application should open.

URL: <Eure IP-Adresse>:8501

My web interface now looks like this.

Now you have to upload a PDF file.

First tests and chat attempts

For the first attempt I downloaded a travel guide about NewYork. You can find it here.

URL: https://guides.tripomatic.com/download/tripomatic-free-city-guide-new-york-city.pdf

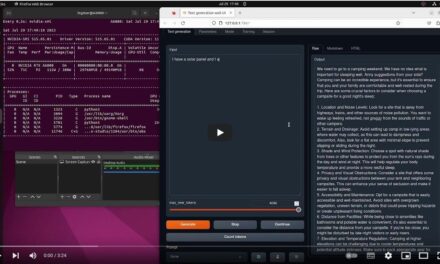

Now load the file into the small app and wait briefly until the vector DB is set up. Then I have asked the following question which should be able to be answered from the PDF file.

Frage: “I need your help as an travel guide for NewYork. I woul like to visit NewYork in March. Please tell what going on in NewYork in March.”

The answer that came back was correct and can be found exactly as it is in the PDF file.

Creating text embeddings – background knowledge

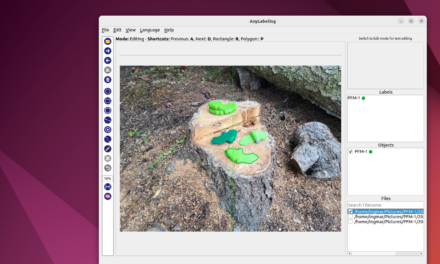

Now I would like to briefly discuss a very important point with you about this RAG Chat application. The PDF file is broken down into text embeddings and saved as vectors in the Chroma vector DB. Now creating the vectors is not so easy as they should ideally contain sections of the text that are linked together. If the text is split up rather unfavorably, the RAG application may not deliver good results. To help you visualize this better, Greg Kamradt has created the following mini-application which allows you to visualize the cutting of the text into text embeddings.

URL: https://chunkviz.up.railway.app/

In the rag.py program, the text is split using the RecursiveCharacterTextSplitter method and stored as a text embedding with a length of 1024 characters with an overlap of 100 characters as vectors.

self.text_splitter = RecursiveCharacterTextSplitter(chunk_size=1024, chunk_overlap=100)I copied the overview with the events in NewYork from the PDF file and pasted it into ChunkViz. Depending on the settings, ChunkViz then generates an overview of how the chunks would be created and this then looks as shown below. It is also interesting to see that the parameter chunk_overlap=100 is probably not used in the RecursiveCharacterTextSplitter method.

But just try out for yourself how you can best transfer your text to the vector DB.

Hierarchical Contextual Augmentation

For those who found this excursion interesting, I recommend reading the following paper “A Hierarchical Contextual Augmentation RAG for Massive Documents QA“.

The paper discusses the limitations of the traditional RAG (Retrieval-Augmented Generation) approach to accurate information retrieval in large documents with text, tables and images such as a HomeDepot product catalog or the Makita tool catalog. To overcome these challenges, the paper presents a hierarchical contextual augmentation (HCA) approach and introduces the MasQA dataset for evaluating multi-document question answering (MDQA) systems.

The HCA approach consists of three main steps:

- Markdown Formatter: Uses Language Model (LLM) to analyze documents in Markdown format and treats each chapter as a first-level heading with a numeric identifier. It also generates tables and extracts images using PDFImageSearcher.

- Hierarchical Contextual Augmentor (HCA): Processes structural metadata, converts segments into embedding vectors and embeds captions generated by Very Large Models (VLMs). Data fields within tables are omitted during embedding.

- Multi-way search: Combines vector search, elastic search and keyword matching to improve the precision of information searches.

The evaluation of the approach introduces the Log-Rank Index measure to assess ranking effectiveness. The MasQA dataset includes a variety of materials, including technical manuals and financial reports, with a diverse selection of question types such as single and multiple choice, descriptive, table and calculation questions.

- The scientific paper is available here: A Hierarchical Contextual Augmentation RAG for Massive Documents QA

- The software is available here on GitHub: Hierarchical Contextual Augmentation RAG for Massive Documents QA

Summarizing a text with LangChain and Ollama and StableLM 2

Another interesting project that I would like to try out myself is this one: ollamalangchainsummary.py

- Setup:

- My MacBookPro M3Max with 48 GB GPU.

- Ollama as Language Model host.

- Stability AI’s StableLM.

- LangChain as the underlying toolbox.

Video Kurs – Advanced QA over a lot of Tabular Data (combine text-to-SQL with RAG)

Summary

I really enjoyed building and developing this small RAG chat application with the Ollama server and the two Python programs. Compared to August 2023 and now February 2024, the development is already much further along and building such small applications together with one of the large language models is a lot of fun. It is also no longer so difficult to get everything up and running. There are lots of up-to-date videos on YouTube and instructions on GitHub & Co. So I’m happy about what a great time I’m living in and will be trying out a few more things here.