Using the No-Code AI Pipeline does not require extensive knowledge or particularly powerful hardware. This guide takes you step by step through hardware acquisition, software installation and operation of the necessary tools. When writing it, I assumed no prior knowledge of AI, only a strong interest and the determination to carry out the project and work with neural networks. Your hardware does not need to be especially powerful if you only want to try out the software presented here. If you want to go beyond trying it out, however, it is worth investing some money in building or buying a suitable computer. Most important for this project, though, is curiosity about the No-Code AI Pipeline and the courage to get started.

Some experience with Linux, and especially with Ubuntu, is helpful. Even without prior knowledge, however, the installation of the No-Code AI Pipeline will work, because every step is explained in detail and can be followed exactly as written. The only important thing is to keep to the order and not skip anything.

As for hardware, it is best to use a reasonably current computer that is no more than three years old (I wrote this manual at the end of 2021). For the software to run at all, the computer does not have to meet any particularly high requirements; it can be a desktop computer or a notebook. When training neural networks, however, an NVIDIA GPU is a great advantage because it accelerates the computationally intensive training. This lets you try out different networks and training parameters faster, without waiting many hours or days between training runs. The graphics card can be somewhat older; it does not have to be a current model.

Introduction to the topic of hardware

As explained at the beginning, installing and configuring the software places no special demands on the hardware. I have had the opportunity to set up and test the AI Pipeline on various systems, and I continuously incorporate the experience gained this way into this manual through updates.

What does a simple entry-level system with a GPU cost?

To build an entry-level system with an NVIDIA graphics card, a good CPU, RAM and a fast drive for data storage, you’ll have to spend around €2,800. Hardware prices currently fluctuate strongly. Pre-built complete systems with current NVIDIA graphics cards are usually cheaper than individually assembled ones. For example, large discount retailers offer gaming computers with an NVIDIA RTX 3070 graphics card that can be used with the AI Pipeline for less than €2,400.

What is important when choosing hardware?

In general, I would like to address a few points here that apply to laptops as well as to high-performance desktop computers. I’ll try to answer the typical questions that anyone planning to buy such a system for neural networks should ask.

Choice of CPU and GPU:

Which CPU to choose depends on whether a GPU will be installed in the computer. A powerful CPU or an NVIDIA GPU pays off mainly when training neural networks. If no GPU is installed, the CPU should be as powerful as possible. If a GPU is used, a CPU with good performance is sufficient, since the training then runs on the GPU and the CPU mainly has to move the data from the drive to the GPU. In this case, the GPU should offer appropriate performance and its memory should not be too small (at least 24 GB). You therefore need to be clear about the future use of the system before buying it. If, for example, neural networks are frequently trained by several users, an RTX 3090 or an A6000 should definitely be installed as the GPU. With its 48 GB of memory, the A6000 makes it possible to run several neural network trainings in parallel.
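To make the point about parallel trainings more concrete, here is a minimal Python sketch that estimates how many training runs fit side by side into GPU memory. The per-training footprint of 10 GB and the 1 GB reserve for driver and display are purely hypothetical values; the real footprint depends on the model, input size and batch size and has to be measured on your own system.

```python
def parallel_trainings(gpu_memory_gb: float, per_training_gb: float,
                       reserve_gb: float = 1.0) -> int:
    """Estimate how many training runs fit into GPU memory at the same time.

    per_training_gb and reserve_gb are hypothetical assumptions, not measured values.
    """
    if per_training_gb <= 0:
        raise ValueError("per_training_gb must be positive")
    usable_gb = max(gpu_memory_gb - reserve_gb, 0.0)
    # Count whole runs only: a run that does not fit completely cannot start.
    return int(usable_gb // per_training_gb)


if __name__ == "__main__":
    for card, memory_gb in [("RTX 3090", 24), ("RTX A6000", 48)]:
        print(card, parallel_trainings(memory_gb, per_training_gb=10))
```

Under these assumptions the RTX 3090 holds two such runs and the A6000 four, which illustrates why the larger memory matters for several users.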

Main memory:

For clarity, the computer’s memory is divided here into system memory (RAM) and GPU memory. If no GPU is used, at least 64 GB of system memory should be installed, ideally current modules of the DDR4-4400 class. If a GPU is used, the system memory should be at least 32 GB, and the motherboard and its memory configuration should allow the system memory to be expanded if the need arises. If several people use the system at the same time and a GPU is available for training the neural networks, the graphics card should have at least 24 GB, preferably 48 GB, of memory. This avoids bottlenecks during parallel training, which occur when a single active training run has already occupied the GPU memory.
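The memory rules of thumb from this section can be written down as a small checklist. The following Python sketch encodes only the thresholds named above (64 GB of RAM without a GPU, 32 GB of RAM with a GPU, at least 24 GB of GPU memory for multi-user operation); the `Workstation` class and the `memory_warnings` function are hypothetical helpers for illustration, not part of the AI Pipeline.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workstation:
    system_ram_gb: int
    gpu_memory_gb: int = 0      # 0 means no NVIDIA GPU is installed
    multi_user: bool = False    # several people train at the same time


def memory_warnings(ws: Workstation) -> List[str]:
    """Compare a configuration with the minimum memory sizes recommended above."""
    warnings = []
    if ws.gpu_memory_gb == 0:
        # CPU-only training: at least 64 GB of system memory.
        if ws.system_ram_gb < 64:
            warnings.append("CPU-only system: use at least 64 GB of system memory")
    else:
        # Training runs on the GPU: at least 32 GB of system memory.
        if ws.system_ram_gb < 32:
            warnings.append("GPU system: use at least 32 GB of system memory")
        # Parallel trainings by several users need a large GPU memory.
        if ws.multi_user and ws.gpu_memory_gb < 24:
            warnings.append("Multi-user system: use a GPU with at least 24 GB of memory")
    return warnings


if __name__ == "__main__":
    print(memory_warnings(Workstation(system_ram_gb=32)))
    print(memory_warnings(Workstation(system_ram_gb=64, gpu_memory_gb=48, multi_user=True)))
```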

Mass storage:

A standard SSD of about 500 GB is sufficient for the operating system. It should be a brand-name product with a correspondingly good read speed. Apart from the operating system and any installed software, this SSD is not involved in neural network training, so its performance is less critical.

A fast NVMe drive should also be installed to hold the training data and feed it to the CPU or GPU. Depending on how many users work with the system, i.e. how many projects are managed, it should have a capacity of at least 2 TB. Here again, the maximum read speed is important and should be as high as possible, ideally around 7,000 MB/s, although this depends on current hardware prices and the available budget. After all, the point is to transfer the training data to the graphics card or processor as quickly as possible. Not saving money here pays off: with the training data on the NVMe drive instead of the SSD, it can be moved between the drive and the CPU/GPU as fast as possible.
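A quick calculation shows why the read speed matters. The Python sketch below computes how long one full pass over a dataset takes at a constant sequential read speed. The 100 GB dataset size and the roughly 550 MB/s for a SATA SSD are assumptions for illustration; real throughput with many small image files is usually lower than the advertised sequential figure, and the operating system may cache part of the data after the first pass.

```python
def read_time_s(dataset_gb: float, read_mb_per_s: float) -> float:
    """Seconds needed to read a dataset once at a constant sequential read speed.

    Uses decimal units (1 GB = 1,000 MB), as drive manufacturers do.
    """
    if read_mb_per_s <= 0:
        raise ValueError("read speed must be positive")
    return dataset_gb * 1000 / read_mb_per_s


if __name__ == "__main__":
    dataset_gb = 100  # hypothetical dataset size
    drives = [("SATA SSD (assumed ~550 MB/s)", 550), ("NVMe (~7,000 MB/s)", 7000)]
    for drive, speed in drives:
        print(f"{drive}: {read_time_s(dataset_gb, speed):.1f} s per pass")
```

Under these assumptions one pass takes about 181.8 s from the SATA SSD and about 14.3 s from the NVMe drive, a difference that adds up over many training epochs.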

Energy consumption

Depending on the configuration, the power consumption can be considerable. I distinguish here between the consumption at idle and the consumption under full load when training neural networks. A desktop computer with a Threadripper CPU and two water-cooled RTX 3090s draws 600 watts at idle and quickly reaches 1,200 watts under full load. If your house does not have a smart meter yet, the high-pitched whistling you might hear, or have to explain to your partner, comes from the spinning disc in the electricity meter…
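The measured values translate into energy and cost with simple arithmetic: energy in kWh is power in watts times hours divided by 1,000. The Python sketch below applies this to the 600 W idle and 1,200 W full-load figures over 24 hours. The tariff of 0.30 EUR/kWh is a hypothetical assumption; replace it with your own electricity price.

```python
def energy_kwh(power_w: float, hours: float) -> float:
    """Electrical energy in kilowatt-hours for a constant power draw."""
    return power_w * hours / 1000


def energy_cost(power_w: float, hours: float, price_per_kwh: float) -> float:
    """Cost of running at a constant power draw; price_per_kwh is an assumption."""
    return energy_kwh(power_w, hours) * price_per_kwh


if __name__ == "__main__":
    PRICE_EUR_PER_KWH = 0.30  # hypothetical tariff, replace with your own
    for state, watts in [("idle", 600), ("full load", 1200)]:
        kwh = energy_kwh(watts, 24)
        cost = energy_cost(watts, 24, PRICE_EUR_PER_KWH)
        print(f"{state}: {kwh:.1f} kWh/day, about {cost:.2f} EUR/day")
```

At this assumed price, a full day of training costs about 8.64 EUR (28.8 kWh), and a full day at idle about 4.32 EUR (14.4 kWh).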

Note: Put the waste heat to use

The waste heat is very useful for:

- quickly drying laundry, shoes, children’s watercolor paintings, snowsuits, etc., or making dried fruit for the extended family.

- letting different kinds of dough rise faster in the server room, now also the proofing room (cover the dough with a damp cloth so that its surface does not dry out even in this short time; already tested for you), which the whole family benefits from.

- warming the laundry and ironing room in winter, so that hung-up laundry, for example, dries faster.

- etc.

Over the past years I have gathered a lot of experience, which I have tried to condense to the essentials in the following hardware recommendations. I give many hints and tips in the hope that they will help you get started with artificial intelligence.

Note: It is important to consider in advance exactly how the system will be used. It should also be clarified whether several users will access it over the network to train neural networks, or whether the system is intended more for private use, such as playing computer games.

Heat generation and cooling

The heat generated by such a high-performance computer should not be underestimated, nor should the effort required to remove that heat from the device. A cool environment in which to operate the computer is therefore highly recommended. The case may also be somewhat larger, so that there is enough air volume and room for the fans and radiators. If a more elaborate water cooling system is used, a large case such as the Fractal Design Define XL pays off even more.
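How much air has to be moved can be estimated from a standard heat balance: practically all electrical power ends up as heat, and air carrying it away warms up according to P = ρ · c_p · V̇ · ΔT, where V̇ is the volume flow and ΔT the temperature rise between intake and exhaust air. The Python sketch below solves this for V̇. The air properties (about 1.2 kg/m³ and 1005 J/(kg·K) at room temperature) are textbook approximations, and the chosen temperature rises are assumptions; it is a rough estimate that ignores case restrictions and fan curves.

```python
AIR_DENSITY_KG_PER_M3 = 1.2          # approx. at room temperature and sea level
AIR_HEAT_CAPACITY_J_PER_KG_K = 1005  # specific heat of air at constant pressure


def required_airflow_m3_per_h(heat_w: float, delta_t_k: float) -> float:
    """Airflow needed to carry heat_w out of the case with a temperature rise of delta_t_k.

    From P = rho * c_p * V_dot * delta_T, solved for the volume flow V_dot.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    flow_m3_per_s = heat_w / (AIR_DENSITY_KG_PER_M3 * AIR_HEAT_CAPACITY_J_PER_KG_K * delta_t_k)
    return flow_m3_per_s * 3600  # convert m3/s to m3/h


if __name__ == "__main__":
    for delta_t in (5, 10, 15):
        airflow = required_airflow_m3_per_h(1200, delta_t)
        print(f"1200 W, dT = {delta_t} K: {airflow:.0f} m3/h")
```

For the 1,200 W full-load example and a 10 K temperature rise, this gives roughly 358 m³/h. The smaller the permissible temperature rise, the more air has to be moved, which is one reason why a cool room and a spacious case help.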

The following picture shows a purely air-cooled system with two powerful Quadro RTX 8000 graphics cards. When both NVIDIA Quadro cards are fully loaded, they alone are so loud that the system cannot be used under a desk in an office. The system as a whole is unsuitable for office use, because the noise of its two power supplies and four high-performance fans clearly exceeds a level that people find acceptable.

A water-cooled system is significantly quieter than a purely air-cooled system.

The following pictures were provided by MIFCOM[1] to show, in this series of articles, what professionally built deep learning workstations with water cooling can look like. Above all, they show how technically complex a professionally built water cooling system is. For me as a layman, building a water-cooled system myself would be too demanding.

The following picture shows the prepared cooling blocks for a four-GPU water cooling system.

The system, assembled for a test run without GPUs to check the cooling circuit for leaks, shows very clearly that space in a normal big tower would quickly become tight. The cooling tubes take up a considerable part of the case, so a sufficiently deep tower is needed to install such a professional cooling system.

A ready-built water-cooled system from MIFCOM looks like the picture below. The radiators installed at the bottom, top and front, each with several fans, are clearly visible. At the front of the case is the expansion tank, which also shows the coolant level, so you can check at a glance whether the cooling is intact.

In this article I have tried to cover the basic considerations for choosing the system best suited to a given application and, I hope, raised the questions that should be asked before buying such a system. In the next article, AI Pipeline - Hardware Example Configurations, I will present several ready-made complete systems as well as a system I built myself.

Article Overview - How to set up the AI pipeline:

AI Pipeline - Introduction of the tutorial

AI Pipeline - An Overview

AI Pipeline - The Three Components

AI Pipeline - Hardware Basics

AI Pipeline - Hardware Example Configurations

AI Pipeline - Software Installation of the No-Code AI Pipeline

AI Pipeline - Labeltool Lite - Installation

AI Pipeline - Labeltool Lite - Preparation

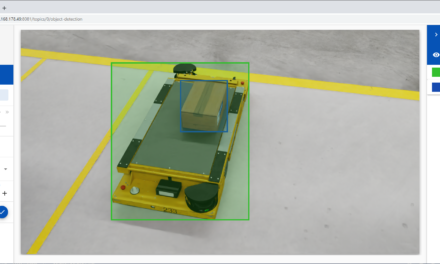

AI Pipeline - Labeltool Lite - Handling

AI Pipeline - Tensorflow Object Detection Training-GUI - Installation

AI Pipeline - Tensorflow Object Detection Training GUI - Run

AI Pipeline - Tensorflow Object Detection Training GUI - Usage

AI Pipeline - Tensorflow Object Detection Training GUI - SWAGGER API testing the neural network

AI Pipeline - AI Pipeline Image App Setup and Operation Part 1-2

AI Pipeline - AI Pipeline Image App Setup and Operation Part 2-2

AI Pipeline - Training Data Download

AI Pipeline - Anonymization-Api

[1] MIFCOM and my employer have a business relationship and therefore regularly exchange information on high-performance computers for machine learning in daily office use. The experience gained and the proven computer configurations are reflected in this series of articles.
