After training the first 31 models for anti-personnel mine detection, my dataset has grown significantly. The main goal was to reduce false positives: leaves that resemble a PFM-1 anti-personnel mine kept being detected as mines. To teach the YOLOv5 network that leaves are not mines, I mixed a larger number of images of grass, trees, bushes, raspberry plantations, etc. into the training data. I also mixed in a dataset of synthetic images showing PFM-1 mines from a drone’s point of view, which extends the covered perspectives to the top-down view of a drone. In addition, I cleaned and enriched the existing images to tone down typical features of the PFM-1 mine that had become too prominent for general detection. In practice, a PFM-1 mine is often only partly visible and still has to be recognized. If detection relies too heavily on a single feature and that feature happens to be covered, the mine is missed.

In summary, the data generated this way improved the training set considerably and led to significantly better results.

Preparation of the training data

The training data is based on 326 PFM-1 images that I searched for on the internet, rendered in Blender and then processed. During preparation, I used Segment-Anything to crop the images and removed some of the prominent markings from the images of the PFM-1 mines.

From these prepared images, I created a total of 3,912 images (on average 12 variants per source image) by rotating them and making parts of the mines transparent. These so-called foreground images were placed on a total of 1,707 background images. Four foreground images were placed on each background image, each scaled to 3% to 5% of the background image and positioned randomly. A label file in YOLO format was created for each composed image.
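The placement and labelling step can be sketched roughly as follows. This is a minimal sketch, not the script I actually used: the function names are made up for illustration, and it assumes that the 3% to 5% refers to the background width and that the foreground keeps its aspect ratio. The label lines follow the YOLO convention of `class x_center y_center width height`, all normalized to the image size.

```python
import random
from dataclasses import dataclass


@dataclass
class Placement:
    x: int  # left edge of the pasted foreground, in pixels
    y: int  # top edge of the pasted foreground, in pixels
    w: int  # pasted width, in pixels
    h: int  # pasted height, in pixels


def place_foregrounds(bg_w, bg_h, fg_sizes, rng, min_scale=0.03, max_scale=0.05):
    """Pick a random size and position for each foreground image.

    Assumption: the scale is relative to the background width and the
    aspect ratio of the foreground is kept. Overlaps are not prevented.
    """
    placements = []
    for fg_w, fg_h in fg_sizes:
        w = max(1, round(bg_w * rng.uniform(min_scale, max_scale)))
        h = min(bg_h, max(1, round(w * fg_h / fg_w)))
        x = rng.randint(0, bg_w - w)  # keep the foreground fully inside
        y = rng.randint(0, bg_h - h)
        placements.append(Placement(x, y, w, h))
    return placements


def yolo_label_lines(placements, bg_w, bg_h, class_id=0):
    """Turn pixel placements into normalized YOLO label lines."""
    lines = []
    for p in placements:
        xc = (p.x + p.w / 2) / bg_w
        yc = (p.y + p.h / 2) / bg_h
        lines.append(
            f"{class_id} {xc:.6f} {yc:.6f} {p.w / bg_w:.6f} {p.h / bg_h:.6f}"
        )
    return lines


# Example: four foregrounds of 400x300 pixels on a 1920x1080 background.
rng = random.Random(0)
placements = place_foregrounds(1920, 1080, [(400, 300)] * 4, rng)
print("\n".join(yolo_label_lines(placements, 1920, 1080)))
```

The actual pasting of the pixels is left out here; the sketch only covers the geometry and the label file content.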

The background images were composed of different photos, for example of meadows, department stores, parks, industrial plants, beaches, aquariums, etc., so that the synthetically generated images also contain suitable noise for training the neural network.

In addition, I generated background images with Stable-Diffusion using prompts like Gothic-Industrial or Warzone. Prompts on the theme of opencast mining and industrial plants also delivered quite useful results. As a result, the 1,707 background images were very diverse.

After the images were created, they were further processed as a whole. First, the color images were additionally saved as grayscale images. Then a blur filter was applied to both the color and the grayscale images, which brought a slight blur into the training images.

The approx. 800 drone images were only converted to grayscale and processed with the blur filter. No further adjustments were made here.
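To make the post-processing concrete, here is a small pure-Python sketch of the variants derived from one color image: grayscale, blurred color and blurred grayscale. It is only an illustration under assumptions: the luma weights (0.299, 0.587, 0.114) and the simple box blur are my choices for this sketch, not necessarily the filter used for the real data, and in practice an image library would do this much faster.

```python
def to_grayscale(pixels):
    """Convert rows of (r, g, b) tuples to rows of gray values (BT.601 luma)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
        for row in pixels
    ]


def box_blur(channel, radius=1):
    """Average each value with its neighbours; edges use the available ones."""
    height, width = len(channel), len(channel[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            window = [channel[j][i] for j in ys for i in xs]
            row.append(round(sum(window) / len(window)))
        out.append(row)
    return out


def blur_rgb(pixels, radius=1):
    """Blur each color channel separately and recombine into (r, g, b) tuples."""
    blurred = [
        box_blur([[px[c] for px in row] for row in pixels], radius)
        for c in range(3)
    ]
    return [list(zip(*rows)) for rows in zip(*blurred)]


def make_variants(pixels, radius=1):
    """Return the original image plus its grayscale and blurred variants."""
    gray = to_grayscale(pixels)
    return {
        "rgb": pixels,
        "gray": gray,
        "rgb_blurred": blur_rgb(pixels, radius),
        "gray_blurred": box_blur(gray, radius),
    }


# Example: a tiny 3x3 image with one bright pixel in the middle.
image = [[(0, 0, 0)] * 3 for _ in range(3)]
image[1][1] = (255, 255, 255)
variants = make_variants(image)
print(sorted(variants))
```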

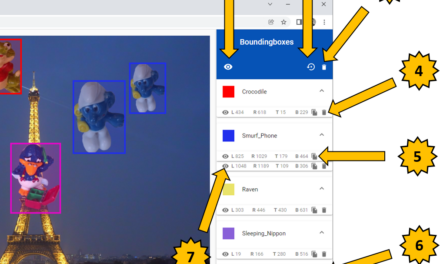

The picture above shows four PFM-1 mines in a meadow. This is what all the images used for the training looked like.

In total, 108,224 records or 56.1 GB of data were generated using this method. These were divided for the training of the YOLOv5m network as shown in more detail in the following section.

Training data overview

The overview shows roughly how the training data was structured without going into the details of how it was created. In total, the training data was divided into five folders.

- 20230527_negative
  - train (200 images)
  - val (13 images)
- 20230529_training_data
  - train (41,997 images)
  - val (6,000 images)
  - test (11,999 images)
- drone_rgb
  - train (6,822 images)
  - val (976 images)
  - test (1,949 images)
- drone_gray_scale_rgb
  - train (6,822 images)
  - val (976 images)
  - test (1,949 images)
- drone_blurred_rgb_gray_scale_rgb
  - train (17,787 images)
  - val (3,701 images)
  - test (7,033 images)
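As a quick cross-check, the per-folder counts can be summed up and compared with the 108,224 records mentioned earlier. The short sketch below uses only the numbers from the list; the variable names are just for illustration.

```python
# Image counts per folder and split, taken from the overview above.
SPLIT_COUNTS = {
    "20230527_negative": {"train": 200, "val": 13},
    "20230529_training_data": {"train": 41_997, "val": 6_000, "test": 11_999},
    "drone_rgb": {"train": 6_822, "val": 976, "test": 1_949},
    "drone_gray_scale_rgb": {"train": 6_822, "val": 976, "test": 1_949},
    "drone_blurred_rgb_gray_scale_rgb": {"train": 17_787, "val": 3_701, "test": 7_033},
}

# Sum the counts per split across all folders.
per_split = {}
for splits in SPLIT_COUNTS.values():
    for name, count in splits.items():
        per_split[name] = per_split.get(name, 0) + count

total = sum(per_split.values())

print(per_split)  # {'train': 73628, 'val': 11666, 'test': 22930}
print(total)      # 108224
for name, count in per_split.items():
    print(f"{name}: {count / total:.1%}")
```

The total matches the 108,224 records, and the split works out to roughly 68% train, 11% val and 21% test.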

Afterwards, the model was trained for 200 epochs, which took about 26 hours on my NVIDIA A6000.
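For readers who want to set up something similar: YOLOv5 reads the dataset locations from a YAML file in which `train`, `val` and `test` may each be a list of folders. The sketch below generates such a file from the folder names in the overview. The root path `datasets/pfm1`, the class name `PFM-1` and the assumption that the images sit directly in `<folder>/<split>` are hypothetical and only serve the illustration; my real configuration may differ.

```python
from pathlib import PurePosixPath

# Hypothetical dataset root; adjust to wherever the folders actually live.
DATASET_ROOT = PurePosixPath("datasets/pfm1")

# Folder names and available splits as listed in the overview.
FOLDERS = {
    "20230527_negative": ["train", "val"],
    "20230529_training_data": ["train", "val", "test"],
    "drone_rgb": ["train", "val", "test"],
    "drone_gray_scale_rgb": ["train", "val", "test"],
    "drone_blurred_rgb_gray_scale_rgb": ["train", "val", "test"],
}


def build_data_yaml(root, folders, class_names):
    """Render a YOLOv5-style dataset YAML with one list entry per folder."""
    lines = [f"path: {root}"]
    for split in ("train", "val", "test"):
        lines.append(f"{split}:")
        for folder, splits in folders.items():
            if split in splits:
                lines.append(f"  - {folder}/{split}")
    lines.append(f"nc: {len(class_names)}")
    lines.append("names: [" + ", ".join(f"'{n}'" for n in class_names) + "]")
    return "\n".join(lines) + "\n"


# Assumed single class name for the PFM-1 mine.
yaml_text = build_data_yaml(DATASET_ROOT, FOLDERS, ["PFM-1"])
print(yaml_text)
```

A file like this would then be passed to YOLOv5's `train.py` via `--data`, together with `--epochs 200` and the YOLOv5m weights.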

Evaluation

Because of the way the data was prepared, the EXP35 model trained in this way had never seen real images of the PFM-1 mine, only synthetically created ones. Only during the evaluation was the finished model shown the freely available dataset.

Evaluation Dataset: https://app.roboflow.com/synthetic-data/bing/2

The result of the evaluation looked like this. Initially, I achieved an mAP of 0.819:

[Figure: Validation curves]

[Figure: Validation Batch Labels]

PFM-1 Mine Detector Video

The video shows a 3D-printed PFM-1 anti-personnel mine that I filmed at different locations. The EXP35 network was used to detect the mine. The video clearly shows that hardly any leaves are detected as PFM-1 mines.

Summary

With the current EXP35 version of the YOLOv5m network, I am satisfied so far: my ideas for optimizing the training data are paying off. It is still a long way to a really stable network, but the foundation is laid, and the success achieved so far with very modest means gives me confidence. I am curious to see how far I can go with synthetic data. The goal is to train a neural network that can recognize a variety of mines and not only the difficult-to-recognize PFM-1 mine.